
A/B testing, also called split testing, compares two variations of the same marketing campaign to see which version performs better. Unlike multivariate testing, which tests several variables and their combinations at once, a split test compares only one variable at a time. A/B tests are indispensable for the data-driven marketer because they let you make decisions based on how real users actually behave. Read on for seven A/B testing examples, as well as guidance on how to run an A/B test and how to use split testing effectively!

Split testing is a straightforward process that can be used in any number of marketing efforts. These are some of the most common applications for A/B tests that almost any business owner or marketer can employ with the campaigns they are already running!

Your email list is an owned asset and one of the most widely used marketing channels across industries. Many companies have established email campaigns with a long history of helpful data analytics and plenty of content to work with.

Any type of email campaign can be A/B tested, including newsletters, announcements, promotions, and lead nurturing sequences. Examples of email elements to A/B test include:

It may be overwhelming to see just how many variables can be A/B tested. Prioritize your list based on the most meaningful elements of your email campaign and focus your efforts on those.

Want to know more about how to A/B test an email? Find details in our guide on split testing email campaigns.

Your website is one of your most important sales and marketing channels because it’s the primary means of getting in front of online customers. Buyers in the digital age expect to be able to search for your company and learn all they need to know even before contacting you.

Web pages such as your homepage, checkout page, or services pages are crucial for getting the attention of website visitors and moving them forward in your sales funnel. A/B testing helps you discover which messages and design elements resonate most with your target audience, allowing you to optimize conversion rates and keep customers engaged with your online brand. Consider A/B testing elements like the following:

Tip: If you’re stuck figuring out which parts of your website are worth testing, use heatmap software to identify which sections get the most (or least) interest.

Pay-per-click (PPC) ads on social media or search engines can drive up lead acquisition costs if they are not optimized to perform as efficiently as possible. Split testing helps businesses zero in on exactly the right message and ad placement to bring in qualified leads. A wide variety of ad elements can be tested and refined, such as:

If your business has a substantial social media following, it may be a smart idea to A/B test aspects of your organic posts. Developing high-quality social media content takes significant time and effort, and you want to be sure all that hard work is giving you the best results possible. Here are some ideas for what to split test on your social media posts:

Remember — A/B tests are not multivariate tests. Split tests are only accurate when you test one variable at a time. For example, if you’re testing two images, don’t change the caption, call to action, or any other element of the posts besides the images. Otherwise, you won’t know which change made the difference!

A lead capture form is essential for every online sales funnel. But it’s not just a practical necessity for getting contact information — it’s also part of your lead generation strategy. To effectively grow your email list, lead capture forms need to be persuasive, easy to use, and tailored to the right audience. Here are a few variables of lead capture forms that may benefit from A/B tests:

Pricing is an important part of brand perception. The way you present the cost of your products or services needs to be both informative and persuasive. Consider how you can use A/B tests to improve your pricing page and more powerfully convey the value of your offering. Many elements of your pricing display could be tested, including:

The checkout process is an important touchpoint in the customer journey. If it’s confusing or difficult to purchase from your brand, potential customers may move on to a competitor. And even if your checkout flow creates a great customer experience, you may not be leveraging it to your advantage. Here are some ideas for checkout improvements that can be split tested:

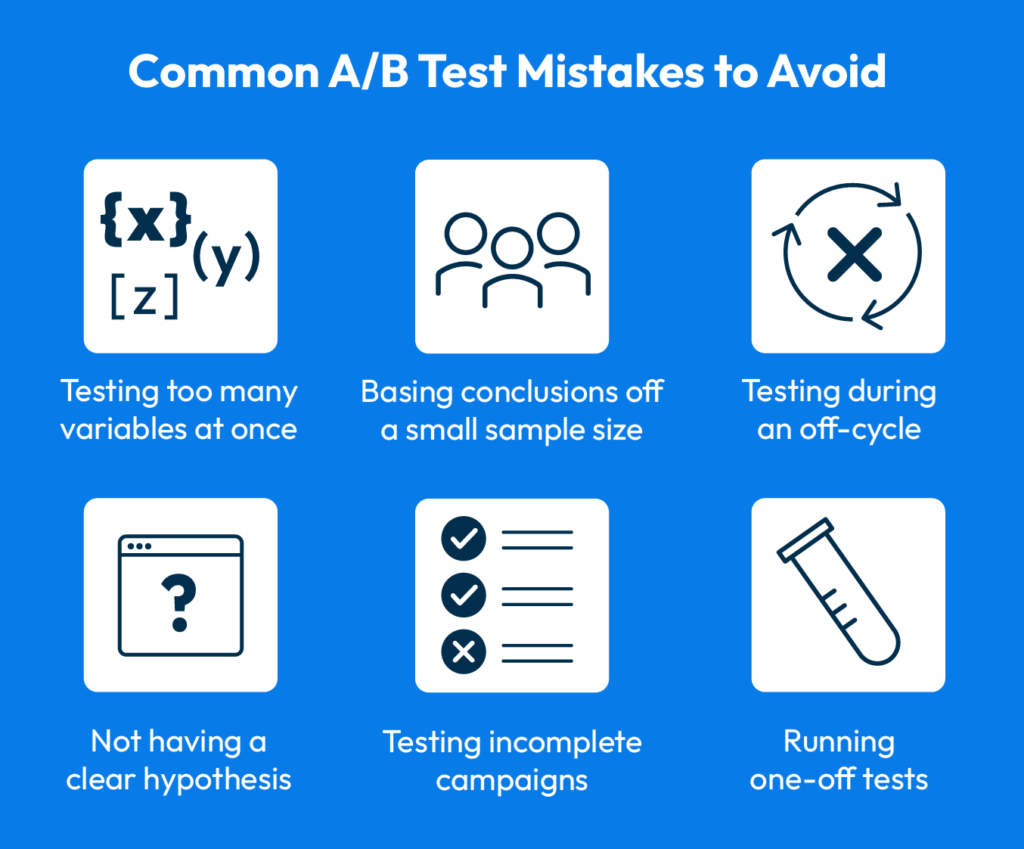

A/B tests are like scientific experiments, with data-driven results that are easily compromised by human error. These are the six guidelines we recommend to obtain usable test results.

If you don’t lay the proper groundwork for your A/B test, you might end up with two campaigns that perform differently without knowing what’s driving the divergence or how to interpret the results.

A/B testing only works if you start with a hypothesis: a theory that changing a particular variable (such as a CTA button) will improve a particular outcome (such as the number of clicks). The best A/B tests are built on researched hypotheses with clear goals that have a measurable impact on your marketing plan.

The difference between a true split test and a mere comparison is having a specific, measurable goal for your test. Choosing a measurable goal requires working with an existing campaign and having access to metrics that track the results. Key performance indicators (KPIs) of an A/B test might include:

An A/B test consists of a control campaign and a test campaign. The control campaign is your existing piece — it shouldn’t be changed in any way. The test campaign is the new version containing one experimental variable and no other changes.

Be careful to conduct your control and test campaigns in the exact same way except for the element being tested. Incorporating too many changes is a common A/B testing mistake that will compromise your results. You won’t know which modifications are affecting campaign performance!
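Keeping the two versions identical apart from one element is easy to check if you describe each version as a set of settings. The Python sketch below compares a control configuration with a test configuration and flags the test if more than one field differs. The field names (`subject_line`, `cta_color`, and so on) and the `changed_fields` helper are hypothetical placeholders, not settings from any particular platform.

```python
# Hypothetical campaign settings; the field names are illustrative only.
control = {
    "subject_line": "Your March newsletter",
    "cta_text": "Read more",
    "cta_color": "blue",
    "send_hour": 9,
}
# Copy the control and change exactly one element for the test version.
test = dict(control, cta_color="green")


def changed_fields(control: dict, test: dict) -> list[str]:
    """Return the sorted names of fields whose values differ between versions."""
    keys = set(control) | set(test)
    return sorted(k for k in keys if control.get(k) != test.get(k))


diff = changed_fields(control, test)
if len(diff) != 1:
    # More (or fewer) than one change means the results can't be attributed cleanly.
    raise ValueError(f"Expected exactly one changed variable, found {diff}")
print(diff)  # ['cta_color']
```

If the check fails, split the extra changes into their own follow-up tests rather than bundling them together.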

Run your control and test versions simultaneously to achieve the most accurate results. Email, website, and advertising platforms, for example, make it simple to deliver the two versions to equal portions of your audience at the same time. But be careful that your test doesn’t overlap with a holiday, busy season, or any other period that could skew the results, unless those distinct time frames are part of your test.

How long to run your test depends on how much time it takes to detect true behavior patterns, and this will vary by campaign. Aim for a test window long enough to collect the amount of data you need to draw statistically significant conclusions.

For example, an advertisement may need to run for a week or more before you start comparing results — while a once-a-month email newsletter may only need 48 hours before you can detect differences between your new campaign and baseline engagement. Observe campaign histories to understand how long it usually takes your audience to interact with content, then you’ll know when to assess results.
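If you already know roughly how many visitors or recipients each version needs, a quick calculation shows how many days your traffic will take to get there. This minimal Python sketch assumes an even split between versions and steady daily traffic; the `days_to_run` function name and the figures in the example are hypothetical.

```python
import math


def days_to_run(required_per_variant: int, daily_visitors: int, variants: int = 2) -> int:
    """Days needed for traffic to reach the required sample in every variant,
    assuming visitors are split evenly across variants each day."""
    if daily_visitors <= 0:
        raise ValueError("daily_visitors must be positive")
    total_needed = required_per_variant * variants
    # Round up: a partial day of traffic still has to be collected.
    return math.ceil(total_needed / daily_visitors)


# Hypothetical numbers: 3,000 visitors needed per version, 500 visitors per day.
print(days_to_run(3000, 500))  # 12
```

Treat the result as a minimum: if your campaign history shows that your audience usually takes longer to respond, run the test at least that long as well.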

Statistical significance in A/B testing means the difference you observe between the two versions is unlikely to be explained by random chance alone, so you can attribute it with reasonable confidence to the change you made. Chance and human error can both produce differences in any user behavior experiment, so your test should only draw conclusions from results you can confidently trace back to a specific variable.
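One common way to check significance for a click or conversion metric is a two-proportion z-test, which asks how likely a gap at least this large would be if both versions actually performed the same. The Python sketch below uses only the standard library; the counts are hypothetical, and the 0.05 threshold mentioned here is a widely used convention rather than a fixed rule. Your testing tool may use a different method (some use Bayesian statistics, for example), so treat this as an illustration of the idea, not a description of how any specific platform calculates results.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided z-test comparing conversion rates of version A (control)
    and version B (test). Returns (z statistic, p-value)."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both versions convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("no variation in the data; cannot test")
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)), written via the complementary error function.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical results: control 200 clicks of 5,000 (4.0%), test 260 of 5,000 (5.2%).
z, p = two_proportion_z_test(200, 5000, 260, 5000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A p-value below your chosen threshold suggests the difference is unlikely to be chance alone; a larger p-value means you don’t yet have enough evidence either way, not that the two versions are proven equal.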

To achieve statistically significant results, your campaign needs enough engagement that the test results reflect true patterns in user behavior. If your sample size is too small, you won’t know whether performance differences are due to your variant or to chance. The larger your sample, the smaller the performance differences you can reliably detect.

A good rule of thumb for email marketing is to wait until your list reaches at least 1,000 subscribers before testing changes. A lower number may not give you an accurate picture of your target audience.
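How many subscribers or visitors you actually need depends on your current rate and the size of the improvement you want to detect. The sketch below uses the standard sample-size approximation for comparing two proportions, with a 5% significance level and 80% power as common default assumptions; the `sample_size_per_variant` name and the rates in the example are hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline: float, expected: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate people needed in EACH version to detect a change from
    `baseline` to `expected` rate with a two-sided test."""
    if not (0 < baseline < 1 and 0 < expected < 1) or baseline == expected:
        raise ValueError("rates must be between 0 and 1 and must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    p_bar = (baseline + expected) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(baseline * (1 - baseline) + expected * (1 - expected))
    ) ** 2
    return math.ceil(numerator / (expected - baseline) ** 2)


# Hypothetical email test: 20% open rate today, hoping to detect a lift to 25%.
print(sample_size_per_variant(0.20, 0.25))
```

For these example rates the estimate comes to roughly 1,100 recipients per version, and detecting a smaller lift (say from 20% to 21%) requires many times more. This is why 1,000 subscribers is a starting point rather than a guarantee of reliable results.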

You’ll also need to consider how much data you’re working with beyond the size of the audience. For example, if you are testing a website page for conversion rate optimization (CRO), keep in mind both the number of visitors to the page and its current conversion rate. If the landing page isn’t set up to convert traffic, you won’t have enough existing conversion data to make an A/B test worthwhile. The page might need an overhaul to align with its purpose before it can benefit from A/B tests.

A/B tests only give meaningful insights when they’re carried out with precision and forethought. Of course, not every test will give you positive results. Sometimes a hypothesis just isn’t true, or a variable isn’t as impactful as you thought it was. That’s just a part of the process!

However, there are a few A/B testing mistakes that will definitely skew the results. Steer clear of these common errors, and you’ll help ensure that your A/B testing efforts pay off.

At the most basic level, A/B tests determine the superior version of a given marketing campaign by experimenting with changes to content or design. Split tests can compare seemingly minuscule elements of a marketing piece, such as a CTA button color, or larger variables such as the right time of day to send company emails.

Additionally, A/B test results can influence product positioning and other high-level components of your brand strategy when used correctly. Follow the principles below to get the most out of your A/B tests.

For A/B tests to reveal deeper insights about your audience and company strategy, you’ll have to run tests consistently. Being “data-driven” is an ongoing process where businesses continually identify areas of improvement, test hypotheses, and optimize campaigns based on user behavior.

One-off tests may help to solve specific problems for a short period of time, but testing won’t be a driving force behind your marketing strategy until it is part of your regular data-gathering efforts. It’s also important to remember that A/B test results have a shelf life. Testing does not lead to one-and-done solutions, since markets and customer needs shift over time.

Rather than thinking of A/B testing in a vacuum, maximize the benefits of your findings by cross-applying results. Consider how test insights can help you hone your marketing strategy and improve other marketing campaigns.

For example, a business might discover through a series of A/B tests that email subject lines with a casual tone have a higher open rate than subject lines with their usual, formal tone. While this is a helpful insight for future email marketing campaigns, it also says something about this company’s audience. The business might benefit from a more approachable brand voice across the board, not just in emails. Now they have a new hypothesis to test!

Marketing software makes data-driven testing accessible to business owners and produces far more reliable results than manual tests. Automated A/B testing tools randomly split your audience into equal groups, so differences between the groups come from the variable you’re testing rather than from how people were assigned.
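Many testing tools assign each person to a version at random but consistently, so a returning visitor keeps seeing the same variant. One common technique is to hash a user ID together with the test name; the Python sketch below shows that idea. It is not a description of how Kartra or any specific platform works, and the `assign_variant` function and test names are hypothetical.

```python
import hashlib


def assign_variant(user_id: str, test_name: str, split: float = 0.5) -> str:
    """Assign a user to 'A' (control) or 'B' (test).

    Hashing test_name + user_id gives an effectively random but stable bucket,
    so the same person always gets the same version of a given test."""
    digest = hashlib.sha256(f"{test_name}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 2**32  # first 32 bits mapped to [0, 1)
    return "A" if bucket < split else "B"


# Simulate 10,000 hypothetical users to see how evenly they split.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}", "cta-color-test")] += 1
print(counts)  # roughly 5,000 each
```

Including the test name in the hash means the same user can land in different groups for different tests, which keeps one experiment’s assignment from biasing the next.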

Split testing is so integral to digital marketing efforts that you’re likely to see this feature in most marketing tools. Email marketing platforms and landing page software let brands run automated tests on their assets, and many digital advertising platforms include native A/B testing tools.

Kartra’s all-in-one marketing software makes the A/B testing process even more effective by consolidating marketing tools into one platform:

With an all-in-one tool like Kartra, you could run tests on website pages by adjusting no-code templates, with no need to coordinate with a web designer. And you’ll get access to data analytics from all of your marketing channels in one place. Having a bird’s-eye view of the user experience means you won’t need to cross-reference data between separate dashboards. An all-in-one platform saves time and the cost of multiple subscriptions, and it gives you more control over your marketing strategy!


This blog is brought to you by Kartra, the all-in-one online business platform that gives you every essential marketing and sales tool you need to grow your business profitably, from sales pages and product carts to membership sites, help desks, affiliate management and more. To learn how you can quickly and easily leverage Kartra to boost your bottom line, please visit kartra.com.